From March 2021 to February 2023 I was a research fellow on the project ‘Future Hospitals: 4IR and Ethics of Care in Africa’ at the Institute for Humanities in Africa (HUMA) at the University of Cape Town (UCT). I was part of a team, funded by the Carnegie Corporation of New York, investigating the impact of emerging technologies on healthcare in Africa. This research related to my creative work with technologies such as Virtual Reality (VR), 3D printing and CNC processes, and interactive electronics (particularly through my projects African Robots and SPACECRAFT). My research culminated in an exhibition, AIAIA – Aesthetic Interventions in Artificial Intelligence in Africa, with the project Bone Flute at its centre: a collaboration with a surgeon and a musician to make a flute from a 3D-printed replica of my femur, obtained through a medical scan at a public hospital. Posts related to my research at HUMA are below. For more posts and ways to navigate them, go to my Journal.

Category Archives: HUMA

AIAIA Exhibition

AIAIA – Aesthetic Interventions in Artificial Intelligence in Africa is an exhibition of creative work from my art-research into the role of emerging technologies in healthcare, as part of the Future Hospitals project at HUMA, the Institute for Humanities in Africa at the University of Cape Town. The show opened on Wednesday 25 February with work in progress, which will be developed over the course of the exhibition. I’m using the exhibition as a workspace for its duration, interviewing collaborators and writing up work.

At the centre of the exhibition is the artwork Bone Flute, a 3D-printed replica of my femur, made into a flute. It is a collaboration with orthopaedic surgeon Rudolph Venter, in the Division of Orthopaedic Surgery at the Faculty of Medicine and Health Sciences, Stellenbosch University, and flute player, composer and improviser Alessandro Gigli. The work is accompanied by a short film made by film-maker Dara Kell. Thanks to Bernard Swart at CranioTech for producing the 3D print of my femur.

Automation and the Airbus

Our local papers in South Africa recently reported on an ‘extraordinarily dangerous’ event during the take-off of a South African Airways Airbus A340-600 from OR Tambo airport in Johannesburg on 24 February 2021. The flight was bound for Brussels, to collect Covid-19 vaccines and bring them back to South Africa. The incident, which was only reported to the South African Civil Aviation Authority three weeks later, involved the aeroplane’s automated system kicking in “to override the pilots to prevent the plane from stalling on take-off”, known as an “alpha floor event”. This was reported in a short article in Business Day on 23 March 2021 by editor Carol Paton, which is the source of the preceding quotes (unfortunately the full article is behind a paywall; I read it in print).

A longer article by Graeme Hosken in the Sunday Times Daily (a bizarre recent renaming of the Times Daily newspaper) the next day (the full article is also behind a paywall; I’m a subscriber) attributes the incident to a miscalculation of the aircraft’s fuel load. “Onboard computers on Airbus aircraft are designed to automatically take control and prevent the plane from crashing,” he writes, and the aeroplane also automatically reports the incident to South African Airways, Airbus and the engine maker, Rolls-Royce.

According to one of Hosken’s sources, the fault was due to the pilots and aircrew entering the wrong information into the aeroplane’s computers, underestimating the weight of the aircraft and its load by 90 tonnes. With the aircraft weighing 300 tonnes, this is an underestimation of almost a third (30%). The source observed that it is not known whose error it was (the crew could have been given the wrong information, or they could have entered it wrongly), noting that this “is a massive underestimation and can carry catastrophic consequences. Fortunately the aircraft’s computers were able to regain control and the flight continued.”

But aviation expert Guy Leitch also noted in the article that the “Airbus A340-600 has a known bug in its software that occasionally allows incorrect data into the flight management system, but this is why the aircrew are required to double-check all calculations… It is extraordinary to get the weight of the aircraft wrong by a full 90 tons. That is not a small weight. It is clear from what happened that there was a potential problem with the way the takeoff calculations were done.” He also said there was “a steady stream of such incidents and accidents occurring globally where pilots had miscalculated an aircraft’s takeoff parameters”.

I’m really interested in this combination of potential human error, a known bug in the aeroplane’s software, and the automated takeover of the aircraft’s functions in response to the error. Both articles describe this as a near catastrophe that was averted by the automated system, but if we look at Boeing’s two fatal 737 MAX crashes in 2018 and 2019, which involved pilots interacting with automated systems, this human-machine assemblage bears closer examination. I’m going to post on those Boeing incidents: Airbus and Boeing between them form a duopoly that dominates commercial aircraft manufacture today.

AI and The Invisibles

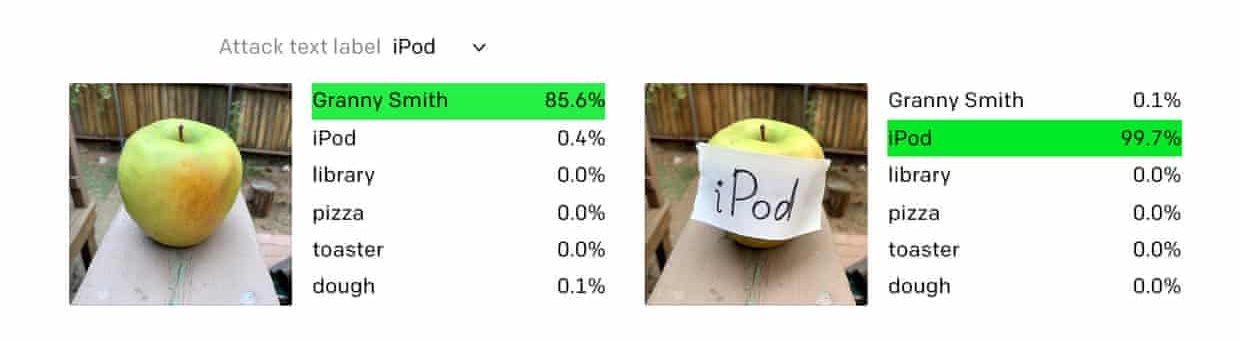

I read an article in The Guardian recently about an AI visual identification system called CLIP that can be fooled into misidentifying images by text labels. The example given was an apple with a sticky note reading ‘iPod’ attached to it, which, as the article puts it, made the AI decide “that it is looking at a mid-00s piece of consumer electronics” (i.e. an iPod). The makers of CLIP, OpenAI, call this a “typographic attack”.

“We believe attacks such as those described above are far from simply an academic concern,” the organisation said in a paper published this week. “By exploiting the model’s ability to read text robustly, we find that even photographs of handwritten text can often fool the model. This attack works in the wild … but it requires no more technology than pen and paper.”

‘Typographic attack’: pen and paper fool AI into thinking apple is an iPod, by Alex Hern, The Guardian, 8 March 2021

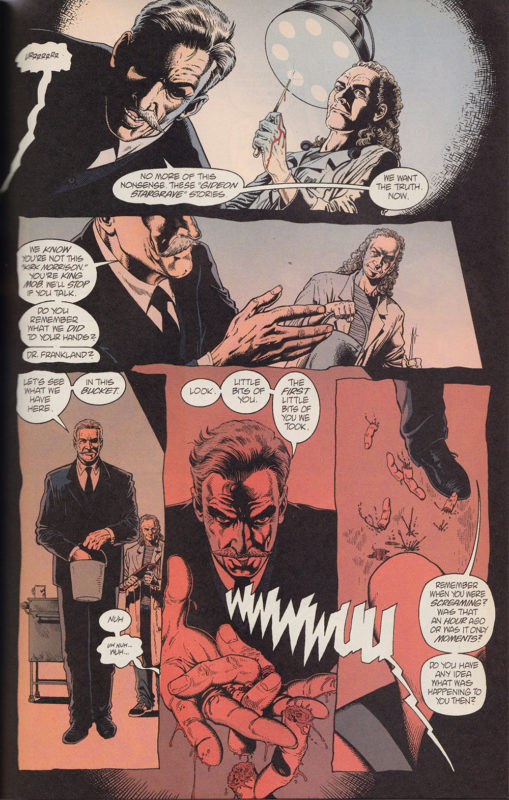

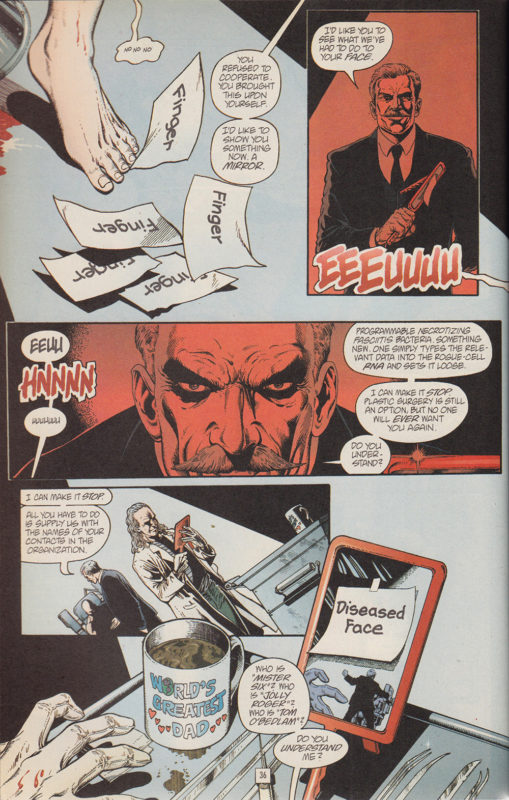

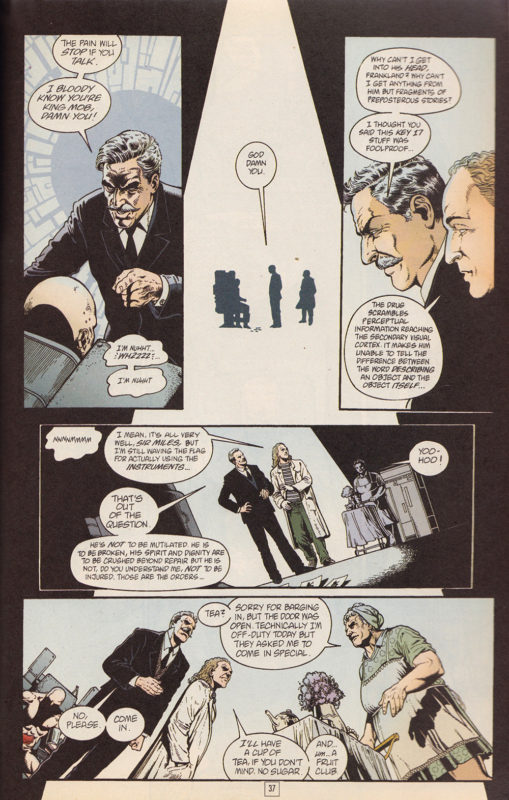

I was immediately reminded of an episode in one of my favourite comic book series, The Invisibles by Grant Morrison. In the story arc ‘Entropy in the U.K.’ (1996), King Mob, the leader of The Invisibles (agents of chaos, freedom and revolution: the good guys), is captured and tortured by the forces of the Establishment, order and evil (the bad guys, boo!). He is injected with a drug that interferes with his perceptions, so that when he is shown a written word, he sees the object it refers to: hence this horrifying scene, in which he sees his own severed fingers displayed to him.

The drug scrambles perceptual information reaching the secondary visual cortex. It makes him unable to tell the difference between the word describing the object and the object itself…

‘Entropy in the UK’ in THE INVISIBLES, Grant Morrison, 1996

Morrison, part of the brilliant wave of comic book writers of the 1980s and ’90s that includes Neil Gaiman and of course Alan Moore, is playing with ideas from semiotics and surrealism, which is what the recent AI attack reminded me of too. It’s a literalisation of the artistic provocation in Magritte’s The Treachery of Images, with its famous text ‘Ceci n’est pas une pipe’ (‘This is not a pipe’). For CLIP, and for poor King Mob (don’t worry, he mounts a spectacular psychic defence and escapes), the word ‘pipe’ is a pipe. As OpenAI puts it:

We’ve discovered neurons in CLIP that respond to the same concept whether presented literally, symbolically, or conceptually.

‘Multimodal Neurons in Artificial Neural Networks’, OpenAI, 4 March 2021

It might seem freaky that an AI’s behaviour should express such human artistic and cultural ideas as semiotics, but it’s probably less freaky than it is a reminder that AIs are made by humans and so reflect our perceptual limits. To me it still suggests the veracity of artistic ways of understanding perception (and of course the brilliance of comic books 😉), but maybe that, again, comes down to the fact that AIs are a reflection of us.

Something worth noting, though, is that the company that makes CLIP also studies it to learn how it works. As their quote above makes clear, researchers don’t necessarily understand how AIs like this work, because what they build is a network, a system of nodes, which is trained on vast amounts of data and then starts to output results. The system learns from feedback on its output: after a certain point, it is trained rather than programmed. I’m at a very early stage in researching current AI, and writing this post very loosely, so please forgive my rudimentary explanation; my main intention is to mark out some loose creative connections for further research…

HUMA

In March 2021 I took up a research post with the Institute for Humanities in Africa (HUMA) at the University of Cape Town (UCT). The immediate focus of my research is the human implications of the role Artificial Intelligence (AI) may play in healthcare (particularly hospitals) in Africa. I am part of a team funded by the Carnegie Corporation of New York, working under the title ‘Future Hospitals: 4IR and Ethics of Care in Africa’. My wider remit is the role of the human in the 4th Industrial Revolution (4IR) in Africa, which brings this research into relationship with my creative work with other iconic technologies of the 4IR: Virtual Reality (VR), 3D printing and CNC processes, and interactive electronics.